As organisations accelerate AI adoption, risk management needs to keep up

Published by Dimitri Vedeneev, Executive Director, Secure AI Lead, and Henry Ma, Technical Director, Strategy & Consulting

What often gets lost in the discussion about Artificial Intelligence (AI) is that AI is not new.

In fact, the term ‘AI’ was coined in the 1950s, and AI has taken various forms since then, maturing through capabilities such as machine learning and robotic process automation (RPA).

But what is relatively new is the accessibility and adoption of AI as a business and consumer tool at scale. In the past, machine learning was mainly used by data scientists and analysts to analyse large amounts of data. Now, Generative AI (GenAI) technologies and tools like ChatGPT, Gemini and Copilot have put AI into the hands of almost everyone, in almost every workplace.

This is causing organisations to rethink how they work. GenAI introduces new risks and amplifies existing ones, and both need to be managed operationally. It also brings many opportunities that can be harnessed strategically as organisations redefine their workflows and human value chains.

GenAI’s Evolution: From Chatbots to Agentic Systems

GenAI burst onto the scene in late 2022 with the release of ChatGPT, and it has evolved rapidly since. Each iteration is ‘smarter’ and more autonomous than the last, and each expands the risk surface for the organisations that use it.

The first evolution involved Large Language Models (LLMs), which demonstrated impressive general language capabilities by training on enormous volumes of information. However, because that training data is broad and general, LLMs often lack specific contextual or domain-specific knowledge. The most common LLM application is the chatbot. Here, the risks an organisation needs to consider include:

- Reliance on inaccurate responses from a GenAI application (e.g. where the AI ‘hallucinates’ a response due to insufficient data; see example: Air Canada ordered to pay customer who was misled by airline’s chatbot)

- Accidental data leakage by users uploading sensitive data to the GenAI application

- Malicious exploitation of the chatbot by users, via crafted prompts, to gain unauthorised access or capabilities.

To improve reliability and accuracy, the next evolution of GenAI introduced Retrieval-Augmented Generation (RAG), which lets organisations connect LLMs to their own corporate data to support more productive business use cases.

- CyberCX example: CyberCX has worked with a client in the construction industry that started piloting AI chatbots that read its policy and contract libraries to help staff manage building contracts. While this enhances utility, it also extends data leakage risk to those sensitive data holdings and introduces the risk of that data being corrupted or modified through data poisoning attacks.

As GenAI’s content creation improved, the next evolution, Agentic AI, began using AI to ‘do’ tasks and ‘make’ decisions, and it has become the current frontier for AI adoption in the workplace. With Agentic AI, AI agents assume identities and are given access to systems to execute actions, which can significantly increase the consequences should something go wrong.

- For example, taking the chatbot use case further, companies are looking to use AI agents in customer support settings, connecting them to customer profiles so they can execute changes or transactions on customers’ behalf. Because these agents have greater access to systems and data, companies will need to carefully protect machine identities against credential exploitation and monitor AI agent behaviour for anomalies.

Furthermore, AI agents that rely on biased training data can make unfair or discriminatory decisions, creating a new category of ethical risk. Examples of this are less common today, but we expect them to occur more frequently as organisations adopt higher-risk use cases such as loan applications or job candidate screening, where uneven distributions of socio-economic attributes inherent in the training data could be perpetuated and amplified in the AI agent’s decisions.

Underpinning all this adoption is a new type of third-party risk around content ownership. Traditional third-party risk management, including managing the risk of supply chain compromise, is more important than ever, as most organisations choose to adopt LLMs developed by an AI vendor. But organisations are also increasingly asking questions about the ownership of generated content, such as:

- Who owns the content generated by AI?

- Who is liable if the content infringes copyright because the LLM was trained on copyrighted material?

- What rights do vendors have to use data that users input for retraining?

Striking the Balance: Opportunity vs. Risk

While AI adoption introduces these risks, they must be weighed against clear growth opportunities for organisations to pursue. Broadly, these opportunities fit into two categories:

- Optimisation of front-, middle- and back-office functions to enhance process and technology efficiencies and improve customer experience.

  - This can range from 24/7 AI assistants that handle routine customer enquiries in front-office roles, to predictive maintenance in the back office that allows maintenance teams to fix machines before they fail.

- Workforce rebalancing to reduce inefficient and time-intensive processes and redirect human capital into higher-value, high-growth areas like R&D and customer-centric innovation.

  - This can shift people from those tasks into more strategic roles, such as moving from manual data entry to data science, or from basic compliance checks to developing new risk strategy frameworks.

Realising the benefits of these opportunities requires AI adoption at scale across the organisation, along with a strategy for continuous improvement. As an organisation’s exposure to AI risks grows and changes, a meaningful and representative taxonomy within the enterprise risk management framework is an essential starting point for understanding and managing those risks.

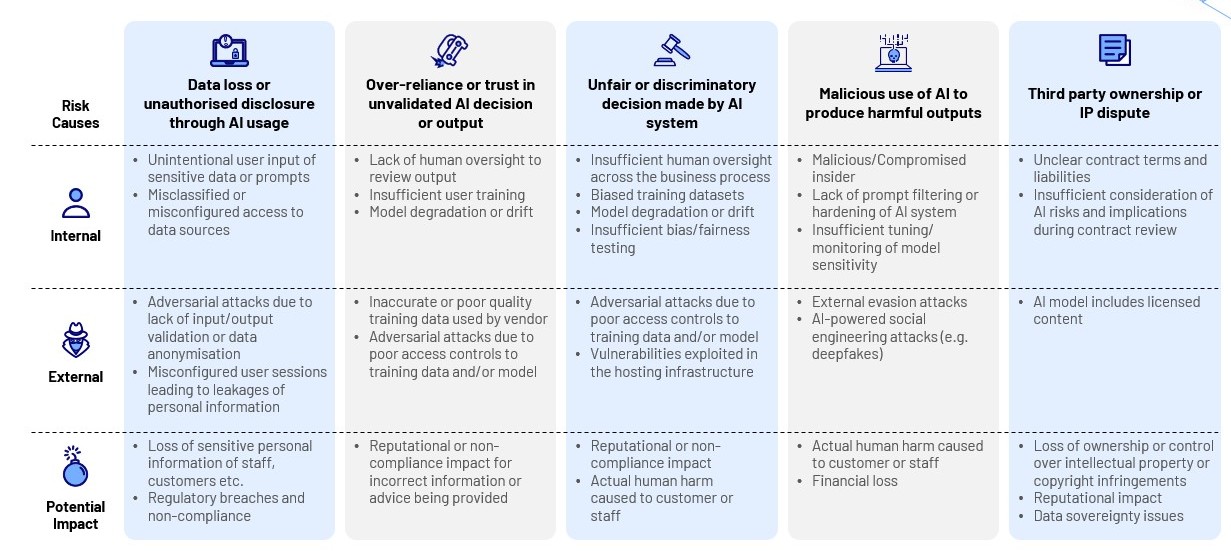

Working with organisations as they begin their AI journey, CyberCX divides AI-specific risks into five types:

- Data loss or unauthorised disclosure through AI usage

- Over-reliance on, or trust in, unvalidated AI decisions or outputs

- Unfair or discriminatory decisions made by AI systems

- Malicious use of AI to produce harmful outputs

- Third-party ownership or IP disputes.

Key Types of AI Risks

This is not an exhaustive list of what could go wrong with an AI system, and new risks are emerging all the time. But these are the key types of risk that an AI system typically introduces, and they can be integrated into an organisation’s existing enterprise risk taxonomy alongside traditional technology and cyber security risks.

Business leaders are rightfully turning their attention to how best to measure these risks and opportunities, including through Key Performance Indicators (KPIs) and Key Risk Indicators (KRIs).

Meanwhile, boards are asking how to move beyond ‘pilot purgatory’ and whether the benefits of AI adoption outweigh the risks.

While every organisation will adopt AI differently to achieve its strategic objectives, and will therefore have a different risk appetite, CyberCX is generally seeing a focus on measuring use case generation, conversion and adoption, particularly adoption across staff levels and business units. CyberCX also sees KRIs focusing more on internal threats than on external ones.

Looking to the future

As AI adoption grows, managing the risks and opportunities strategically will become a critical success factor for an organisation looking to reap the benefits of AI safely and securely.

We will look more deeply at the evolving regulatory landscape, risk mitigation strategies and governance frameworks in upcoming blog articles.

Contributing authors: Jarod Inzitari and Amrit Singh